How do we learn to see? And how do we learn to be seen? Scientific theories, layered over centuries of intellectual history, have shaped and directed our perceptions of vision. Contemporary studies of how sight develops in children help gauge what the increasing dominance of screen visuals is teaching us.

Sight has long been viewed as humankind’s primary and most noble sense. It has left traces in our language, just as in the preceding sentence: to view something is both to consider and to look at something. Another example: to say that I want to scrutinize something – understand something that’s not directly visible to my eye – is everyday speech and doesn’t sound particularly literary or allegorical. Of course, you can get a ‘feel’ or a ‘taste’ for something, you can ‘approach’ a question or ‘listen’ to an argument. But sight remains the primary metaphor for abstract mental activity – a metaphor long since buried in everyday language, almost invisible. See!

Is this, then, the natural order of things? People who seek to emphasize sight’s dominant role tend to point to the fact that more than half of the nerve cells in the cerebral cortex process visual information. Then again, more recent research has toned down the notion that cognitive functions have precise locations in the brain, showing instead that senses often coordinate amongst themselves to help us understand the world. Understanding a visual impression doesn’t just entail decoding the light that falls into the eye. It also requires pairing this information with the eye muscle’s sideways movement, the hand’s turning of the object, and the body’s relative position in the room – not to mention semantic and affective patterns that are continuously activated and reshaped. A few black stains on a sheet of paper might be incomprehensible until we move our gaze to the word printed beneath the image, at which the letters B, E and E immediately allow us to see the contours of an insect. We were initially and momentarily blind, unable to interpret the impression presented to the eye, until we discovered the word, and the word made us see.

The understanding of sight as primary and exalted has old roots. More than two thousand years ago, Plato (b. 428 BC) wrote about the eye as a sun glowing with the light of reason. Aristotle (b. 384 BC) argued that sight is the sense best equipped to help us understand the world since it most clearly reveals distinctions. We want to know what separates objects and discover the boundaries between phenomena. Sight also offers a familiar scientific ideal by providing distance: being able to observe something from afar, without having to get into direct contact and touch, or be touched. Although sight originates in two small orbs in the face, it is wide-reaching. Touch, by contrast, covers the entire body, yet it is intimate and has a pitifully short reach. It is touch, however, that is the first sense to develop in the human foetus: receptors for touch grow early across the lips of the embryo. But how do we begin to see?

In the beginning there is water, where a translucent grain of slime is suspended. This grain, which is one, splits after a few hours into two. In the coming days, it will split again, into four, eight, sixteen and, ultimately, billions. The word cell means ‘small room’; the development from slimy grain into an eye is, indeed, the story of infinite rooms created out of nothing. The clump of cells begins to shapeshift as parts of the slime push outward, so that a hollow is created in the middle. A group of cells then folds in on itself, making yet another empty space with an opening to the outside. In some creatures this opening ultimately becomes the mouth; in others, like the sea urchin (and, for that matter, humans), it becomes the anus. That’s all there is to life for some animals: they learn to swallow and shit. For those that will at some point learn to see, the process continues.

Inside the morphing shape a slit turns into a tube, which becomes the spine and the nervous system. From the blister that grows on top, two thin stems shoot out toward the unified surface that will become skin, and as if the surface knew – as if it, too, desired this – it sinks down a bit; stem and bowl meet. The bowl keeps sinking until it comes loose from the surface and turns into a ball, which rests in an indentation on top of the stem. In the outermost layer of the ball, cells create a white, hard film, but in one smaller area they become transparent. This glass-like surface can have no blood vessels, as blood would cast dark shadows and hinder light reaching the layer of cells deep within the inner ball, the retina.

All these parts prepare in the dark – independently but in synchrony – for the day they will see the light. They are fragments, yet also a totality; completely inexperienced, yet driven as if by intuition. The optic nerve, emanating from the strange ball, fills with hundreds of thousands of thin fibres every minute, getting ready.

Then, birth. For the first time, light pours in through the black hole of the pupil. It gets refracted through the lens, landing on the retina’s light-hungry cells, where one type of energy transforms into another: a chemical reaction turns light into an electrical nerve impulse sent back along the optic nerve to the brain. A transfer: patterns in one domain are moved to another – like a metaphor.

Baby Eye Brain, Paul Insect, London. Image by bixentro via Wikimedia Commons

The lens is not perfectly calibrated at birth: it is rounder than it will later become, and the retina isn’t fully developed; a newborn’s sight is blurry. Nevertheless, as early as the first day after birth, an infant begins to follow the rules compiled by scientists observing infant gazing behaviour. In the absence of stimuli, babies start searching their surroundings. They scan widely until they come upon a line, or a border, and pause there.

Some periods are critical for the development of sight. If light doesn’t reach the eye in the first months of life, the eye won’t be able to see later, as shown in a series of experiments from the 1960s that would be considered unethical and cruel today. Researchers studying the development of sight sewed one eye of kittens shut shortly after birth to assess the impact of early sight deprivation. After three months, the cats couldn’t see when the eye was reopened. In contrast, adult cats didn’t go blind even if an eye was kept closed for a long time.

In 1688, the philosopher William Molyneux, whose wife was blind, posed a question to the philosopher and physician John Locke: would a blind person who had learned about geometric shapes using only her hands be able to recognize a cube using her eyes if she could miraculously see? Locke said she wouldn’t. His answer was verified some forty years later by the much-discussed cataract surgery on a 13-year-old boy (an experiment repeated many times since). The boy, who had previously been blind, regained his sight but wasn’t able to identify the cube using sight alone. But when he touched it and associated the visual phenomenon with the feeling from his hand, he learned to see the cube as a cube.

In research on babies and gaze patterns, age-specific preferences have been discovered. At three months an infant prefers looking at red and yellow objects, at six months at things that fall, at nine months at faces. A constant, unchanged by age, is a preference for new stimuli. In parallel and in interaction with these preferences, the child trains her abilities: to steer the movements of the head, to move things from one hand to another, to pick up small objects with the fingers, to associate the visual event of seeing a breast or a bottle with the physical event of being fed. The child begins to look for things that have been hidden, moves closer to things that are of interest, imitates the movements of others, and begins to sort colours and shapes according to likeness. She begins to develop a new preference for causality and correlation.

Humankind’s innate desire to seek causality and correlations led to early attempts to understand how sight works. Democritus (b. 469 BC) imagined very thin films of atoms, which he called eidola, constantly shedding from objects and making their way into the eye, where they collided with the atoms of the soul. Lucretius (b. 99 BC) had similar theories but called the imagined films simulacra, writing: ‘since amongst visible things many throw off bodies, some loosely diffused abroad, as wood throws off smoke and fire heat, sometimes more close-knit and condensed, as often when cicadas drop their neat coats in summer, and when calves at birth throw off the caul from their outermost surface, and also when the slippery serpent casts off his vesture amongst the thorns.’ The idea that vision is created by something entering the eye is usually called intromission theory. Critics of the idea questioned how the eidola of an entire mountain could fit inside an eye, and how all these films could avoid getting tangled up on their journey.

A more common belief, known as emission theory, centred on the eye emanating its own light. While most proponents of this paradigm believed in a combination of light from inside and outside the eye, the one-directional emission theory is often ascribed to Empedocles (b. 490 BC) and his description of a divine fire in the eye. Since only fragments of Empedocles’s writings survive, his claims are known largely through second-hand accounts by later philosophers, who held up pure emission as an example of a theory worse than their own; this makes his actual position hard to pin down. From a modern perspective, it seems obvious that the eye doesn’t have its own light – wouldn’t we otherwise be able to see in the dark? But inspiration for this theory may have been drawn from the eyes of nocturnal animals that reflect light and appear to glow in the dark. Plato, among others, introduced ideas leaning more on interaction, arguing that a divine light originating in the eye would need to encounter an external light. Aristotle, on the other hand, believed that nothing emanated from either the eye or objects, but that the eye somehow transformed the light between subject and object into a medium for sight.

These theories attracted proponents interested not only in psychology and perception but also in medicine and mathematics. The Greek surgeon Galen (b. 129), who dissected baboons and attended wounded gladiators, was among the first to give an anatomical description of the parts of the eye. He thought that sight happened in the lens, a belief that was widespread until the seventeenth century. Following an anatomical description of the eye in De Usu Partium (On the Usefulness of the Parts of the Body), Galen writes that he hesitated to speak about the direction of light ‘since it necessarily involves the theory of geometry and most people pretending to some education not only are ignorant of this but also avoid those who do understand it and are annoyed with them.’

Euclid (b. 325 BC) might have been a typical source of such irritation. Uninterested in the eye’s physical characteristics, he restricted his attention to the rules of mathematics that might explain sight. Perhaps influenced by the geometry that explained how an amphitheatre stage could be made visible to as large an audience as possible, he developed a conical model of the field of view, suggesting that a visible object needs to reach the eye along straight, uninterrupted lines. Although Euclid was among those who wrongly believed that the eye emanated light, he did achieve a solid mathematical theory. Ptolemy (b. 90) later modified Euclid’s cone model in a text that, along with works by Aristotle and Galen, was translated into Arabic in the ninth century; these texts greatly influenced the various works on optics written in the Middle East over the following centuries. These, in turn, constituted the foundation for advances in optics in the European Middle Ages and the Renaissance.

The Baghdad philosopher al-Kindi (b. 800), a leading figure in translating Greek works and a writer of works about shadows, mirrors and the colour of the sky, defended the emission theory with reference to the eye’s shape. Noting that the ear is obviously conical in shape for receiving sounds, he surmised that the eye, being spherical and mobile, directed its light outward. The person who would ultimately gather all earlier theories into a great synthesis, the basis of our contemporary understanding of sight, was another Iraqi philosopher: Ibn al-Haytham (b. 965). Having failed to carry out the caliph’s orders to dam the Nile, al-Haytham wrote his treatise on the sense of sight while under house arrest in Cairo. He noted that light affects the eye in several ways: the pupil can contract, strong light can damage the eye, and we see an after-image on the inside of our eyelids after staring at the sun. Concluding that sight must occur in the eye itself, he realized that emission was superfluous as an explanation: any rays sent out from the eye would somehow have to come back in again. Instead, he paired Euclidean geometry with his own ideas about how light bounces off surfaces and lands in the eye, through refraction and in a structured dot pattern. Johannes Kepler was greatly influenced by translations of al-Haytham’s work, as well as by studies by the anatomist Felix Platter (b. 1536), who had highlighted the retina’s central role in sight. In his 1604 work Astronomiae Pars Optica, Kepler confirmed the intromission theory once and for all.

Signs that emission theory isn’t fully dead are still evident today. In their article ‘Fundamentally Misunderstanding Visual Perception’, a group of psychologists from Ohio State University write that an unsettling number of adults believe something emanates from the eye in the process of seeing. The text is written in a humorous tone, but it is unusually judgmental. The authors are aghast that even psychology students give the wrong answer when asked if light leaves the eye. Their indignation seems to grow into frustrated despair when they attempt to design an intervention to coax participants to the right answer. They have the subjects read the course literature before being quizzed and give them a short lecture on the mechanics of vision, but nothing seems to work. The only intervention that sparks a change is when test subjects are shown a child-like, over-explicit animated clip stating, “NOTHING LEAVES THE EYE!”, underscoring that Superman’s x-ray vision isn’t real. Showing the film leads to a slight reduction in test subjects selecting the emission alternative, but their choice reverts when the test is repeated just a few months later.

Describing one research subject who ‘sheepishly’ admits that emission cannot be real if no-one can see the substance he had stubbornly claimed leaves the eye, the authors consider that there might nevertheless be something legitimate in an experience of vision that feels like it is directed outward, toward the surrounding world. They seem to develop some wisdom despite, or perhaps because of, their bafflement, concluding that erroneous beliefs appear to coexist with scientifically accurate understandings in the same person without the contradiction ever becoming apparent to the subject.

There is something elusive about all these advances in explaining sight – something that’s constantly just out of reach for the scientist breaking new ground. Kepler’s realization that sight occurs in the retina paradoxically made sight even more opaque than before, moving it deeper inside the body. He was able to explain the mechanism up to the membrane and point to the upside-down image imprinted there, but at this point he threw in the towel. Somebody else would have to tackle the rest: whatever was happening in the nerves, how the image is turned upright and becomes what we see.

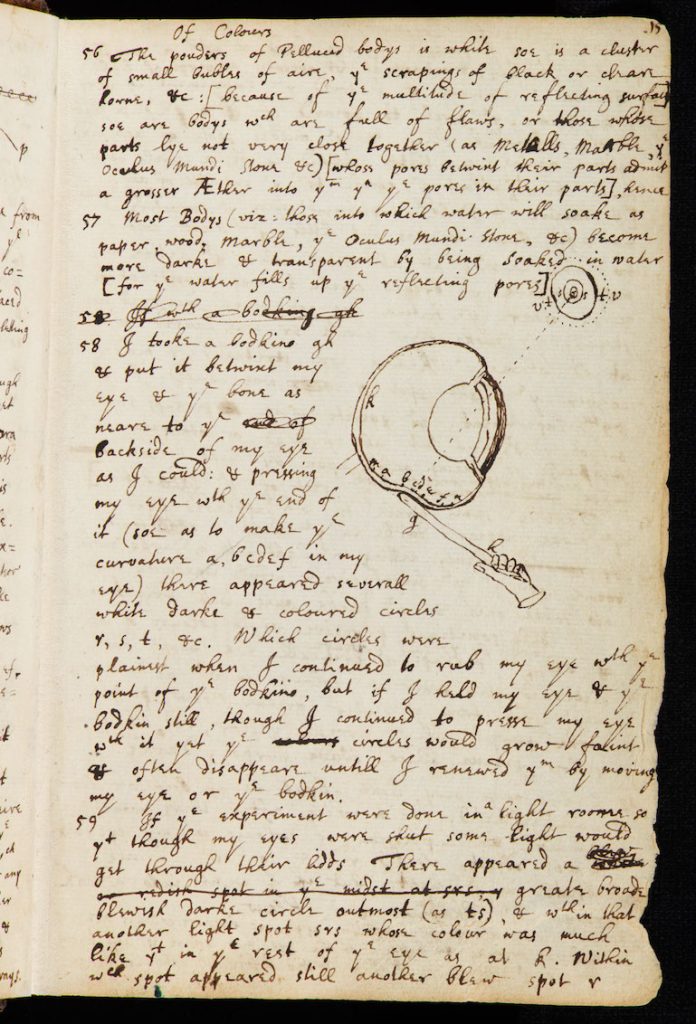

Image from Newton’s essay ‘Of Colours’, illustrating the experiment in which he inserted a bodkin into his eye socket, pressing on the eyeball to try to replicate the sensation of colour in normal sight. Image via Cambridge University Press

Isaac Newton (b. 1642) took a more practical approach to the question. Seeking to understand which aspects of his sight depended on the eye and which on the soul and the surrounding world, he experimented by pushing sharp tools past his own eye to bring about an experience of colour. Descartes, on the other hand, peeled the back membranes off an ox’s eye and held it up to the window like a light-sensitive film to show that the eye is like a passive camera obscura. But he referred the interpretation of these visual impressions to the imperfect, immaterial soul, which he assumed communicated in some undefined way with the pineal gland. It was as if he had proudly chanted MECHANICS, MECHANICS, MECHANICS, and then mumbled, embarrassed, ‘and a bit of magic’. Scientists have continued to chase our elusive consciousness ever since.

In 1981 Swedish neurophysiologist Torsten Wiesel and his colleague David Hubel received the Nobel Prize for their discovery of how the brain deconstructs and reconstructs visual images. They found that certain nerve cells are activated when the eye sees vertical lines and others when it sees horizontal lines. Certain parts of the brain are dedicated to certain visual objects, like the fusiform face area, which is activated when we see faces and, if electrically stimulated, can bring about a visual hallucination of a face. In a much-discussed Japanese study from 2012, scientists were able to guess people’s dreams after analysing their brain activity in the primary visual cortex in the moments before they were awoken from sleep. Vision and dreams, it seemed, were getting closer to one another.

Before Kepler’s breakthrough and Descartes’s attempt to divide humans into mechanical flesh and an invisible, elevated soul, came a group of medieval philosophers interested in light and sight, often called ‘the perspectivists’, who were especially fascinated by optical illusions. In De oculo morali (On the Moral Eye), a best-selling manual for priests, astronomer Peter of Limoges (b. 1240) combined theories about vision with advice for good Christians. The perspectivists explored three kinds of vision: direct, broken and reflected (i.e., unencumbered vision through air, vision distorted by materials of varying thickness, and vision through a mirror). De oculo morali turns these three categories into a metaphor for how man, as per the Bible, sees everything reflected as though in a mirror, whereas only God sees things directly and as they really are.

Optical illusions were used as teaching analogies: an object situated in denser material and viewed from a thinner material, like something in water seen from the air, appears to be larger than it actually is – just as the wealthy erroneously appear great and powerful in the eyes of the poor. The metaphors were, in the way of metaphors, brazenly malleable – as when the seven virtues were likened to the eye’s seven protective parts.

Mistrust of the human senses is a trope of religious literature. They are presented as cunning portals to sin but also endowed with the possibility of accessing the divine, which means they must be corrected and educated. Curiously exploring the senses without a higher purpose or goal is a sin; asceticism is a moral ideal. Lust is described as a particularly complicated sin: as opposed to gluttony, where the desired is a passive object, lust holds the potential to be multiplied by two meeting eyes. The Bible turns these terms inside out: ‘For now we see in a mirror dimly, but then face to face’; lying face to face might be the closest we can get to another person, or, as here, a vision of no longer being held prisoner by the body’s perpetually distorted gaze. The eye is an opening and a boundary; true understanding is both possible and impossible.

Religious architecture has long experimented with directing our gaze. Some medieval churches on the Swedish island of Gotland feature a small hole in the wall, sometimes shaped like a clover, sometimes covered by a beam that can be pushed aside. These holes, called hagioscopes, are thought to have provided a glimpse of the altar for those who were not allowed to enter the church due to their sins or illness. Just as the gaze had to be educated and protected from sin, it could also be directed the right way: skyward, where the righteous are rewarded for their hard work with colourful church windows and vaulted ceilings – a dream of beauty entirely freed of sin.

Hagioscope, Saint-Maurice Church, Freyming-Merlebach, France. Photo by Jean-Marc Pascolo via Wikimedia Commons

A child learns to see and understand what she sees by categorizing her experiences. She identifies similarities, understands that this is a dog and that’s a dog too, but that thing over there is a cow. The parent helps the child’s categorization efforts by providing words. The more experiences the child amasses, the more certain she becomes in categorizing and stereotyping, and the fewer her misapprehensions. What people like to call children’s imagination is actually a series of mistakes based on a lack of understanding – involuntary missteps arising from the child’s love of rules and order, her desire to know what’s what. The parent teaches clichés and the child learns to group fragments into predictable shapes. Anything else would be near impossible; an unpredictable parent scares the child, and it would be inefficient, to say the least, to attempt creativity when teaching what belongs to the categories fruit or clothing.

Children aren’t educated by their parents alone but by their surroundings, too. And these surroundings are increasingly visual. They spend more and more time scrolling on screens instead of looking others in the eye. The screen is an efficient teacher, drawing both attention and the gaze, over and over. Driven by our preference for the new, we get stuck in a paradoxically repetitive flow of newness, flooded by fragments of visual impressions without a physical anchor. More than anything, the learning created by this visual flow might become habitual, a desensitization leading to indifference from having seen almost everything without necessarily having experienced much.

In his 1981 book, Simulacra and Simulation, Jean Baudrillard wrote about how our hyperreal era, characterized by images of images of images, is like an unstoppable, self-playing piano. The infinite number of reproductions easily get inside us and turn us, too, into simulations, replicas.

Anyone who remains attached to Descartes’s view of the soul as immaterial and untouchable will find this an uncomfortable thought. Perhaps the truth is closer to both contemporary cognition research and the view of religious ascetics: vision becomes what vision sees. We are our senses. The screen’s ever-present and repetitive images communicate clichés with unprecedented efficiency, and humans are hardwired from childhood to imitate. At the same time, clichés inspire widespread ambivalence. While we reach for them to understand and be understood, they also inspire a sense of creeping panic and distaste. We want to fit in and communicate effectively, but we also want to be seen for who we ‘really’ are – and hold onto the belief that there remains something hidden and holy, something secret in this world.

Social media feeds are full of people moving with uncanny similarity, where form often seems wholly unrelated to content. Consider one clip you might stumble upon on your screen: a member of the new profession ‘death doula’, a certified expert in sitting by people’s death beds, doing a TikTok-dance for the camera while she points, in synchrony, at text that appears on the screen to the beat – a list of things to keep in mind when somebody is dying. Her compassionate smile could be directed at a close friend, but her gestures and expressions copy previous imitations of movements that she has seen others do on other screens to direct the viewer’s attention to their makeup tips, children or trauma. The screen is a hagioscope for our time. An insistent metaphor rears up again: an inside that is forever revealed will ultimately turn into an outside, like an inverted version of the process of new cell formations being created in the womb. Instead of more rooms, fewer.

Published 13 March 2024

Original in Swedish

Translated by Kira Josefsson

First published by Glänta (Swedish version) / Eurozine (English version)

Contributed by Glänta © Sanna Beijnoff / Glänta / Eurozine
