Olivier Jutel: How have you found the reception to your book Surveillance Valley and its central thesis that the internet is essentially a surveillance weapon?

Yasha Levine: My book has come at a really good time, right as people are becoming aware of the ‘dark side’ of the internet. Before Trump it was all good things: Facebook manipulation was a good thing when Obama used it. Surveillance Valley came out two months before the Cambridge Analytica story hit and everything I talk about is a preface to how personal data manipulation is central to our politics and economy. It’s sort of the whole point of the internet, going back 50 years to ARPANET. I hope the book fills some gaps in our knowledge because, as strange as it seems, we have forgotten this history.

The way the internet gets discussed, it’s often as if it were some immaterial phenomenon. What your book does is to explain the material, political and ideological origins of the network. Can you talk about the military imperatives it served?

One thing we have to understand about the internet is that it came out of a research project that started during the Vietnam war, when the US was concerned with counterinsurgencies all around the world. It was a project that would help the Pentagon manage a global military presence.

At the time there were computer systems coming online, like SAGE, that functioned as the first early warning radar system in America to alert to a potential Soviet bombing raid. It connected radar arrays and computer systems to allow analysts to watch the entire US from a screen thousands of miles away. This was novel, as all previous systems relied on manual calculation. Once you can do that automatically it’s a totally new way of thinking about the world, because all of a sudden you can manage airspace and thousands of miles of border from a computer terminal. This is in the late ’50s and early ’60s. The idea was to expand this technology beyond airplanes to battlefields and societies.

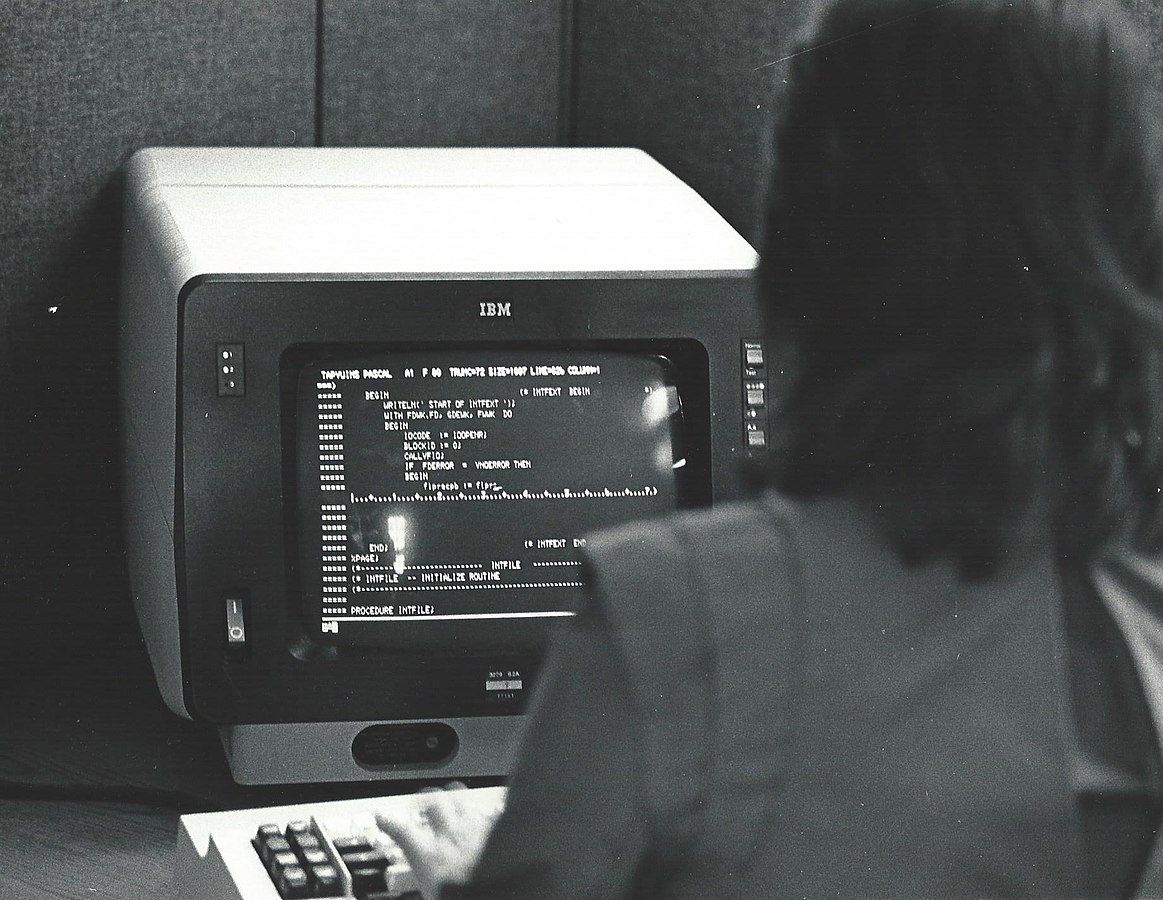

One of the things that ARPA was involved with in Vietnam in the ’60s was ‘bugging the battlefield’, as they called it. They’d drop sensors into the jungle in order to detect troop movements hidden from aerial view. These sensors were wireless and would ping information back to a control centre, where an IBM computer took that information and mapped troop movements to help select bombing targets. This became the basis of the electronic fence technology that was brought back to the US and used on the border with Mexico. It’s still used today.

The internet came out of this military context and the technology that could tie different types of computer networks and databases together. At the time, every computer network was built from scratch in terms of network protocols and the computers themselves. The internet would be a universal networking language to share information.

There seems to be a contradiction in the ideological founding of the internet between anti-communist paranoia and liberal-libertarian optimism about information unlocking human potential. What do you make of this?

It seems like a contradiction, but it really isn’t. The spectre of communism led to the internet and helped accelerate this technology. In rarefied military circles, left-wing politics was seen as taking over the world, domestically too. After Vietnam, the question of counterinsurgency was how to placate societies without giving them what they want. They saw the problem as people not being managed properly: people have certain concerns, there is some inequality, and material resources aren’t being distributed properly. America wasn’t seen as facing an ideological challenge or an anti-colonial struggle; rather, it had a technocratic problem of management.

And so the computer networks, which became the internet, functioned as sensors in society in order to monitor unrest and demands. The information they ingested could be fed into computer models to map the potential path these feelings and ideas were going to take. Then you could say, ‘OK there’s a problem here; let’s give them a little of what they want’, or ‘Here’s a revolutionary movement; we should take out that cell’.

So the network would create a utopian world where you could manage conflict and strife out of existence. It would never come to armed conflict, since you had a better and kinder form of technocratic management.

I can’t help but think of Hillary Clinton’s tweet about the devastation of Flint, Michigan. ‘Complex intersectional problems’ like racial and class oppression are put into little problem-solving boxes for benevolent technocrats to workshop some ideas.

Yes, and this all begins in the 1960s. You mention the Democrats. There’s this guy Ithiel de Sola Pool, who was an MIT social scientist and a pioneer in using computer modelling, polling and simulations to run political campaigns. Relying on Pool, John F. Kennedy’s 1960 presidential campaign was the first to use modelling to guide outreach and messaging. What’s interesting is that Pool would go on to play a big role in ARPANET’s first surveillance project, which was then used to process surveillance data on millions of American anti-war protestors in the early ’70s.

He was also a guy who believed that the problem with international and domestic conflict was that government planners and business leaders didn’t have enough information; that parts of the world were still opaque to them. The way to get rid of strife and have a perfect system was to have no secrets. He wrote a paper in 1972 in which he claimed that the biggest impediment to world peace was secrecy.

If we could design a system where the thoughts and motivations of world leaders and global populations were transparent, then the ruling elite would have the information they needed to properly manage society. But he saw this in utopian terms: it’s better than bombing people. If you can influence people before they pick up Kalashnikovs and have to be bombed, gassed and napalmed, it’s the better of the two systems.

How much does our own hyperactivity online, in seeking pleasure, or in scrolling the timeline just one more time like a slot machine, mirror the imperatives and failures of total information awareness? There is a capacity to collect individual pathologies and idiosyncrasies, but it fails in its own terms, right?

If your premise is wrong, whatever information you feed in, the results are always going to be wrong. The promise of ‘more information equals better management’, or a better society, is where this falls apart. A lot of these cybernetic models and computer systems that are supposed to give managers a better view of the world have blind spots, or are susceptible to being manipulated, while giving the people using them the sense that they are in total control.

This is what happened with Hillary Clinton. Her campaign had the best minds in data modelling and, right up until the end, their numbers told them that everything would be great. They weren’t even interacting with the real world anymore, but with their model. It wasn’t the electorate but their own idea of how the electorate would behave. They were fundamentally wrong.

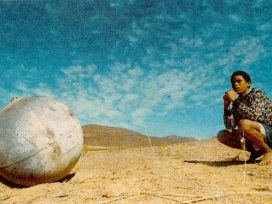

The idea that the more data you have, the better you understand the world, is wrong: data is only ever a representation of that world, shaped by specific assumptions and values. Take the example of bugging the battlefield. The Viet Cong knew what was happening and saw these sensors. They could trick the system. They’d create vibrations and drive empty trucks past to draw airstrikes onto empty jungle, allowing the actual convoys to get through. The system was manipulated, but the planners saw it as working perfectly and thought they were annihilating the enemy. In reality, they were bombing empty jungle.

One of the bright spots of researching this book is finding that both the boosters and the detractors of these systems have overestimated the effectiveness of these networks. Take Donald Trump and Cambridge Analytica. For people who are horrified by Trump, Cambridge Analytica gives them a way of explaining how he got elected. They take all their anxieties and place them on this company that supposedly zombified the electorate through some Facebook posts.

When the network produces a social reality that we don’t like, it’s as if it were infected by an alien entity or virus. It’s similar to extreme anti-communism in this way.

Hang on a second, this is actually what Facebook wants advertisers to believe about its business. If you can convince voters to vote for Donald Trump just by scraping their profiles and showing them a few targeted ads, then as an advertiser or a political campaign you’ve got to put all your chips on Facebook. This is how powerful they are supposed to be. Laying all this at the feet of Cambridge Analytica helps Facebook’s bottom line. It sells Facebook’s product: access to its user base, renting out users and selling targeted ads. Opponents of Facebook believe that it is much more effective than it really is.

An interesting story in Wired reported that Facebook sold ads to Trump more cheaply than to Clinton because of the kind of user engagement Trump content generates. Trump voters tend to be pretty mad online. Does Facebook privilege seething emotion and the darker side of politics?

Yes, they want people stuck on their platform as long as possible. Anger, outrage and hatred are big things that keep people online. I can tell you as a Twitter user that that’s true! If you are emotionally invested in something, you are engaged with it.

But what you’re describing is not exclusive to Facebook. You could say cable news gave Trump airtime, covering every ridiculous statement, because of the extraordinary ratings. As with Facebook, everything is about ratings, because it’s all based on ad revenue.

But this is the minutiae. We conceive of the internet as the cloud, disconnected from physical space. But it’s private property, where we have no real rights as users. We exist in data centres and on wires owned by giant corporations. We have no rights in that space; there is no right to be on the internet. These companies make the rules and we have no recourse. For people on the left thinking about this, it is clearly a toxic place; it has become a means for capital to further control our lives.

What would a holistic, public-oriented and left approach to this form of oligarchic power be?

This might be the hardest question of our time. You can’t focus on reforming the internet without looking at the broader cultural environment in which it exists. It’s a reflection of our values and political culture. It’s dominated by giant corporations, intelligence agencies and spies, because generally speaking our societies are dominated by those forces.

You can’t start with the internet, you have to start deeper: politics, culture. It’s a brutal analysis, sorry. Our conception of politics today is so crude. We are restricted to thinking that ‘we need to regulate something’, ‘we need to pass some laws’. We shouldn’t start with that, we need to start with principles. What does it mean to have communication technologies in a democratic society? How could they help create a democratic world? How does this democratic world take control of these technologies? How can we stop simply taking a defensive position? What does it mean to have an active pose? To have a political culture that says, ‘This is what we want technology to do for society’.

Everything that we’ve been sold about the democratic nature of the internet has always been a marketing pitch grafted on to the technology. To sell the internet as a technology of democracy when it’s owned by giant corporations is ridiculous. The only answer that I have is that we have to figure out what kind of society we want to have, and what kind of role technology can play to that end.