For Raphaël, In Memoriam

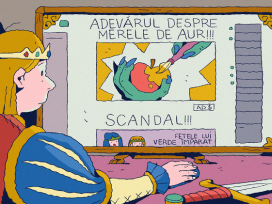

The internet has revealed to us the power that editors have: to decide whether what you say is true.

Lawrence Lessig

According to Zygmunt Bauman and Peter Sloterdijk, in the liquid universe of bubbles, the individual has absolute sovereignty over what he or she chooses to know. Mark Zuckerberg’s naivety, on the other hand, resides in his belief in the innocence of networks. A survey in the US has shown that during the final three months of the US presidential campaign, fake news was shared more widely and more often on Facebook than real information. But does that mean that Facebook has to act against its members? Is it a platform rather than an editor? More traffic means more advertising. For some advertisers, a more easily influenced readership is even better. In 2017 Facebook hosted over two billion active accounts, and its financial value surpassed $530 billion, an increase of forty per cent.

After Charlottesville, Zuckerberg spoke out against any form of discrimination. But the doors remain open for the unchecked diffusion of partisan rumours on an open network. In the face of such populism, how are we to restore, whether technically or legally, any kind of representative democracy? On the other hand, freedom of opinion is protected by the First Amendment to the American Constitution and no one wants to entrust to Facebook a mission to carry out universal censorship.

This crisis is also a crisis of the media: computer screens give way to smartphones, with their keyholes through which we all peer. Social media’s superiority derives from the possibility it affords of sharing gossip with a multitude of unknown persons. The virtuous revolt against fake news is part of a defence mechanism on the part of elites and poses a problem that we are far from resolving, despite Mark Zuckerberg’s best intentions.

Reliability engines

We need search engines, of course. But it is just as crucial to have access to reliability engines. The rush to invest in such things has already begun. The initiative of George Soros and Pierre Omidyar, the beta version of which was tested by the Guardian, is one of the contenders. It is intended to help monitor politicians through the intermediary of NGOs based in London. Alongside the UK, it will also cover Latin America and Africa and works in English, Spanish and French. It correlates news programmes, parliamentary broadcasts and newspaper articles in real-time with reliable statistical databases that can confirm or refute claims made by politicians. This will help journalists, who often lack access to references.

In the longer term, why not extend this technology to Facebook and Twitter? Tim Berners-Lee, creator of the World Wide Web, recently advocated working together with the largest companies: ‘We have to fight against disinformation by helping points of entry into the web in the form of Google and Facebook to continue their efforts to solve this problem but, at the same time, we must avoid creating any central institution that would decide what is ‘true’ and what is ‘false’.’ Google and other large organizations are making a start: in August 2017, Mozilla announced a new program to combat disinformation. Jimmy Wales (Wikipedia), meanwhile, has urged social networks to become more collaborative and more transparent as a way to counter disinformation: ‘Technology platforms can choose to expose more information about the content people are seeing, and why they’re seeing it. We need this visibility because it sheds light on the process and origins of information and creates a structure for accountability.’ In France, too, Le Monde is drawing up a scale of website reliability.

Pressure on Facebook from civil society and democratic associations is therefore mounting. This is the part of the establishment that Trump supporters loathe. A year after Trump’s victory, the inquiries into his links with Russia indicate that the civil war being waged through the media is still continuing. Commenting in August 2017 on evidence that Facebook had been used by Russia to spread disinformation during the 2016 election campaign, Tom Kellerman, an online security expert who first warned the White House about Russian attempted cyber-attacks in 2015, argued that: ‘It’s not in Facebook’s best interest to acknowledge the fact that they have been used like this … Facebook is so profitable because of the marketing aspects of the platform. And that marketing engine, that footprint, and the implicit trust that people place in them, it was essentially used against the American marketplace.’ Following Facebook’s acknowledgement in May 2017 that (at that time) unspecified political actors had been carrying out ‘information operations’ using Facebook, internet researchers Philip Howard and Robert Gorwa called for Facebook to open up its metadata: ‘Their data scientists could probably provide some insights that the intelligence services cannot.’

Google and Facebook, which have benefited massively from the transfer of advertising from press websites to their own platforms, are now asking the newspapers for help in flushing out the fake news that they themselves are hosting. According to Mark Zuckerberg, ‘We do not want to be arbiters of truth ourselves, but instead rely on our community and trusted third parties. […] The most important thing we can do is improve our ability to classify misinformation.’ Facebook has announced that it will detect and indicate any suspect pages, add better-quality links and rely on third parties such as Snopes, an NGO that is active in the field of detecting digital misinformation. The company will also refuse to accept any advertising budgets from those who are linked to fake news. Thousands of fake accounts have been closed in France, although articles linked to them still exist in the US, Germany, France, the Netherlands, etc. After Charlottesville, the suppression of pages on the Daily Stormer website or of tweets from US-based white supremacist Chris Cantwell on Twitter, Facebook and YouTube is an obvious instance of the contradictions facing service providers who want to avoid being seen as interfering but nevertheless respond to the demands of their public and their investors. Does that mean that Facebook is turning into an editor?

In February 2017, for the benefit of his critics, Mark Zuckerberg published Building Global Community, a declaration of Facebook’s allegiance to communitarian values, which would be served among other things by stricter checks on the validity of information circulating through its channels. This was at the time that the NASDAQ index was laying bets on Facebook’s profits: its growth had been enormous, showing two billion active accounts in June. Facebook had raised its advertising tariffs by 25 per cent and its return on investment had risen to 45 per cent. The company was close to becoming the most powerful enterprise on the planet. Investors’ confidence carries much more weight than the views of critics. Flagging up suspect pages has little effect on extremists, because the documents will be published elsewhere. And are credulous readers really going to take time to read articles published alongside disinformation? Moreover, Facebook wants to avoid any editorial role that would make it responsible for the content that is circulated. The only material that will be filtered out is whatever is illegal everywhere and is clearly intended to do harm by misrepresenting incontestable facts. What, then, is the point in laying down principles for social media communities? Not to mention that these principles are the opposite of the company’s behaviour in the stock market: laissez-faire on the one hand, collective responsibility on the other?

Accelerationism vs. representation

According to Zuckerberg, after tribes come ancient cities, democracies and then… the Facebook community. Following the accelerationist paradigm developed by Nick Land, fake news is the product of an intensified form of capitalism that sees speed as its only principle. Trump and Facebook are contemporaries. Paradoxically enough, Zuckerberg invokes a traditionalist ideology. Such discrepancies are not surprising from an accelerationist perspective:

‘Accelerationists argue that technology, particularly computer technology, and capitalism, particularly the most aggressive, global variety, should be massively sped up and intensified – either because this is the best way forward for humanity, or because there is no alternative. Accelerationists favour automation. They favour the further merging of the digital and the human. They often favour the deregulation of business, and drastically scaled-back government. They believe that people should stop deluding themselves that economic and technological progress can be controlled. They often believe that social and political upheaval has a value in itself. […]’

‘We all live in an operating system set up by the accelerating triad of war, capitalism and emergent AI,’ says Steve Goodman, a British accelerationist. ‘Like it or not,’ argues Steven Shaviro, in his 2015 book on the movement, No Speed Limit, ‘we are all accelerationists now’. ‘In Silicon Valley,’ says Fred Turner, a historian of America’s digital industries, ‘accelerationism is part of a whole movement which is saying, we don’t need [conventional] politics any more, we can get rid of “left” and “right”, if we just get technology right. Accelerationism also fits with how electronic devices are marketed – the promise that, finally, they will help us leave the material world, all the mess of the physical, far behind.’

In their Accelerate Manifesto, Alex Williams and Nick Srnicek write that ‘We need to posit a collectively controlled legitimate vertical authority in addition to distributed horizontal forms of sociality, to avoid becoming the slaves of either a tyrannical totalitarian centralism or a capricious emergent order beyond our control. The command of The Plan must be married to the improvised order of The Network.’ That is, near enough, what Facebook has done. Facebook is a public service, controlled by its board of directors, one that levies a kind of tax on commercial services wherever it deploys its ‘communities’ and sells its advertising space.

But there are still a few bugs. During the American election campaign, Facebook censored a Pulitzer prize-winning photo that showed a group of children in rags, including a naked little girl. It was taken during the Vietnam War, and the children were walking along a road, accompanied by some American soldiers. The Norwegian user who posted the photo had his account blocked. Facebook also removed the posts of newspapers that had re-published it in the first place. The Norwegian government protested and Facebook apologized. The intention behind this photo was to show the gratuitous cruelty of war. History is made up of documents that tell of horrific events. Should we censor them too?

Facebook’s inability to distinguish the sense of each and every post means it has an inbuilt flaw: how is it possible simultaneously to ensure freedom to share and freedom of speech? Facebook applies a filter in Pakistan, in Turkey, in Russia – is it easier to acquiesce in censorship than to accept democratic responsibility? Along with freedom of expression and opinion come bubble effects – and that is when chimeras make their appearance. The hiatus between the First Amendment to the American Constitution and efforts to ensure inclusion and citizenship is editorial in nature. Facebook’s reply is that you have to be prepared to accept less restrictive rules when you are processing images and speech. According to Zuckerberg, we should be moving towards ‘a system of personal control over our experience’ by applying differing options depending on the content that each requires or excludes. These statements constitute Facebook’s new horizon, which is open to a ‘greater proportion of newsworthy and historical content’.

Are we witnessing the beginnings of greater user control over the service? The dream would be to find a way of supporting not only what a majority would accept, but what minorities demand. At present, however, there is such a large element of chance in the links promoted or suppressed that standards of ‘community governance’ appear inadequate. So is Facebook going to stop censoring those occasionally shocking works of art that form part of world culture? What about violent images that have an important historical significance? On the other hand, how are we to handle extremist propaganda and deliberate provocation? Horrific events happen across the world; should we show them, and can we do so without making editorial choices? A global communications company based in California in the era of Donald Trump cannot shy away from such debate.

Facebook’s lax editorial practices

According to Zuckerberg, ‘Over the next year, we’ll be adding 3,000 people to our community operations team around the world – on top of the 4,500 we have today – to review the millions of reports we get every week, and improve the process for doing it quickly. If we’re going to build a safe community, we need to respond quickly. We’re working to make these videos easier to report so we can take the right action sooner – whether that’s responding quickly when someone needs help or taking a post down.’ But when The Guardian newspaper interviewed a Facebook content moderator, they found that ‘he wasn’t given any mandatory counselling, although his company offered mindfulness sessions every few months and there was access to a counsellor on request. However, he said, the contracted workforce, many of whom were recent immigrants with limited English skills and who were hired to work in their native language, would choose to seek psychological help in their personal time rather than asking for help internally for fear of losing their jobs or being sent home without pay.’

Zuckerberg also said: ‘While we don’t write the news stories you read and share, we also recognize we’re more than just a distributor of news. We’re a new kind of platform for public discourse – and that means we have a new kind of responsibility to enable people to have the most meaningful conversations, and to build a space where people can be informed.’ The fact-checking announcement made on 15 December 2016 was a turnaround from 12 November, just days after Donald Trump won the election, when Zuckerberg said of Facebook: ‘I believe we must be extremely cautious about becoming arbiters of truth ourselves.’ Such contradictions reveal the problem: Facebook is incapable of refereeing its own pages. We are only at the beginning of the debate about Facebook’s editorial role.

The companies that work for Facebook are sceptical. Aaron Sharockman, the executive director of PolitiFact, the Pulitzer Prize-winning site that fact-checks for Facebook, said that by the time his reporters debunk an article, it could have been up for several days to a week or more, meaning the effect of the flag may be limited. ‘We don’t have a great sense of the impact we’re having,’ he said. The documents to which Facebook’s auditors have access display a kind of deliberately slapdash approach worthy of one of the ‘merchant companies’ that Adam Smith accused, back in the eighteenth century, of behaving like absolute monarchs without consideration of the interests of the population as a whole. Because it is swamped by the torrent of data that passes through its servers, Facebook neither sees nor analyses all the material or all the links. Even if it were possible, the discernment required would be lacking. Social networks will only bow to legal requirements. Facebook will only hide or remove Holocaust denial content. This is not on grounds of taste, but because the company fears it might be sued. Facebook states: ‘We believe our geo-blocking policy balances our belief in free expression with the practical need to respect local laws in certain sovereign nations in order to remain unblocked and avoid legal liability.’ As a result, ‘there is an apparent disinterest from the social media firms in how their networks were being used. Facebook, for instance, leaves most of its anti-propaganda work to external organizations such as Snopes and the Associated Press, who […] seem unwilling to take down automated accounts engaging in political activity. Researchers from the Oxford Internet Institute did find one country to be significantly different to the others. In Germany, fear of online destabilisation outpaced the actual arrival of automated political attacks and has led to the proposal and implementation of world-leading laws requiring social networks to take responsibility for what gets posted on their sites.’ Computational propaganda is now one of the most powerful tools against democracy. Social media firms may not be creating this nasty content, but they are the platform for it. They need to significantly redesign themselves if democracy is going to survive social media.

Bursting the bubble

Addressing in particular the European public, which was shocked by the filtering carried out by Facebook and was opposed to censorship in general, Mark Zuckerberg indicated that the regional publication standards for Europe would be freer, provided that they did not give rise to polemic. Segmentation of the public into communities that are unaware of each other is at the heart of a strategy that combines targeted advertising with personalized systems of regulation.

But how is it possible to widen the spectrum of documents and to control the radicalization of online exchanges? According to Zuckerberg: ‘Our approach will focus less on banning misinformation, and more on surfacing additional perspectives and information, including that fact checkers dispute an item’s accuracy. While we have more work to do on information diversity and misinformation, I am even more focused on the impact of sensationalism and polarization, and the idea of building common understanding. Social media is a short-form medium where resonant messages get amplified many times. This rewards simplicity and discourages nuance.’

A classification algorithm also reveals an incorporated vision of the world; what is required is editorialization of the informational loops. The whole notion of restricted pluralism makes no sense: you would have to know the number of times each link was shared, the number of accounts, in order to relativize certain pages or to alert readers to dangerous items of disinformation. Facebook might occasionally indicate that some document or other is indexed but rejected ‘off-Facebook’.

Fakebook or meaningful groups?

Zuckerberg claims to be associating the model of mass-market television with civil movements. He cites Tahrir Square or the Tea Party. But when it comes to an insurrection in a dictatorship better prepared than Tunisia was under Ben Ali, digital access is rapidly blocked. Tahrir Square has also become symbolic of liberty being trampled on in Egypt, a country in which Facebook submits to control by the authorities. Facebook is regularly accused of being prepared to bend to the Chinese government’s censorship requirements.

Facebook’s plan uses what is more or less the structure of Maslow’s pyramid: the base is action within the field of physical safety and health, the intermediary stage is the support given to credible information. The horizon would then be greater inclusion within a global community. Is this credible? Friends of long standing, who live under the influence of information diffused in Russia or in Ukraine, have voiced their disagreement on Facebook and have broken off relations with each other. Oxford researchers consider that Ukraine was used as experimental terrain for Russian strategy. What kind of democratic confrontation can you have between divergent viewpoints?

If, out of necessity, Facebook becomes a control organization, this can scarcely be of help to meaningful critical or hard-hitting groups, to groups that are less consensual and more ironic or creative. Facebook is in thrall to the dominant order. After the newspeak that is associated with state totalitarianisms, what we have here is a new form of confrontation in which the protagonists never actually meet each other. Each of them is travelling in their own vehicle, but snatches of thoughts are accessible to others. Discussion is obsolete except in the form of indirect commentary. The operator can, at any time, disconnect a transmitter or make it impossible for it to be heard. He can also create or promote conversations, but there is no public forum for discussion. Only the circulation of data is real. A few emancipated individuals will be able to participate in more structured, minority discussions; they might even be able to conceive of a democratic space in which opposing views might be brought into confrontation. But, if so, that would mean leaving the motorway, exiting from the flow of traffic, forming real communities and not just peer groups.

Facebook refers to nothing outside itself. An authentic dialogue, however, presupposes exteriority. Does Facebook really support communication and exchange within the society of which it is the image? Our epoch is characterized by deep social injustices and by a form of mental isolation. Zuckerberg’s plea in favour of inclusive communities is just a pious hope: such communities intensify peer character. Is Facebook really going to counteract the loss of community life by providing an equitable form of empowerment? Zuckerberg would stand a better chance of fulfilling this aim by paying his tax bills than through the magic of using words to generate income.