As AI technology permeates our lives, debates over its cultural impact are intensifying. Springerin explores how AI is reshaping creativity and power dynamics. It moves beyond binary views of AI as either saviour or oppressor to examine the broader social changes arising from the interplay between human and machine intelligence.

The development of AI could have taken a different direction, argues Clemens Apprich. ‘Almost exactly 20 years ago, the introduction of social media led to a veritable explosion of data; based on increasingly powerful hardware, this led to the breakthrough of neural networks – and thus the connectionist worldview.’

For connectionists, neural networks work like the human brain, deriving patterns from existing data to make predictions. Symbolists, on the other hand, insist that machine intelligence be built on formal logical operations. The superiority of connectionist models became apparent in 2016, when Google Translate switched to neural machine translation and its translations began to sound more natural.

Yet connectionist AI is not without its flaws. Based on inductive logic, it ‘establishes the past as an implicit rule for the future, which ultimately leads these models to reproduce the same thing over and over again’. By replacing future possibilities with a repetition of the past, such models perpetuate harmful stereotypes.

Apprich seeks to resolve the symbolist–connectionist conflict by introducing a third approach: Bayesian networks. These ‘are based on the principle that the past does not simply generate predictions, but that the prediction is intuitively inferred’. This form of reasoning is creative, breaking existing patterns and serving as a source of new ideas.

The potential for machine creativity may depend on how the technology is applied in the future. Apprich wants to reclaim intuition and idleness as integral to human creativity, as well as the collective nature of intelligence, in which machines, too, can play a part.

AI and neocolonialism

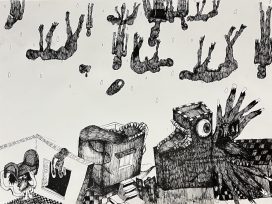

The Middle East has long been a testing ground for western technologies, particularly in aerial photography. Now it is the turn of AI-driven surveillance systems. Anthony Downey explores how this is changing the neocolonial project. ‘Colonization by cartographic and other less subtle means was about extracting wealth and labour; neocolonialism, while still pursuing such goals, is increasingly concerned with automated models of predictive analysis.’

To predict events, AI is used to analyse and interpret visual data. Formerly creators, humans become mere functions within algorithm-driven systems, as the philosopher Vilém Flusser and the filmmaker Harun Farocki both anticipated. Tech companies such as Palantir are developing AI systems for military use, enabling autonomous weapons to ‘see further’ and react faster than humans. But the ethical implications of AI-based decision-making in warfare have yet to be fully understood.

Downey draws a parallel between historical and modern forms of control in the Middle East. ‘Contemporary mapping technologies, developed to support colonial endeavours and the imperatives of neocolonial military-industrial complexes, extract and quantify data using AI to project it back onto a given environment.’ This creates a continuous feedback loop, in which the algorithmic gaze dictates future actions, reinforcing the power structures of neocolonialism.

AI and sci-fi

Fear is a common response to the explosion of AI. Louis Chude-Sokei recalls the long tradition of literature and film in which technology is depicted as hostile to humans. Technophobia is not always rational and is often fuelled by other prejudices. The science fiction writers William Gibson and Emma Bull, for example, have portrayed AI as powerful African deities threatening the old religious order. ‘The two fears – of race and technology – merge and reinforce each other.’

As for the bias inherent in AI itself, it is not just racial: discrimination based on gender, disability and other characteristics also comes into play. When decision-making power is transferred to algorithms, there is no one to hold accountable. This kind of machine autonomy, Chude-Sokei insists, is far more threatening than doomsday stories about robots overtaking humans.

There is still hope that human practice can bring contingency to the use of technology, as happened in the 1970s, when electronic music producers customised synthesisers to suit their whims. So long as AI hasn’t brought about the end of humanity, it may well be rewired for the better.

Review by Galina Kukenko